How Google’s Genie 3 is Building Worlds for Everyone

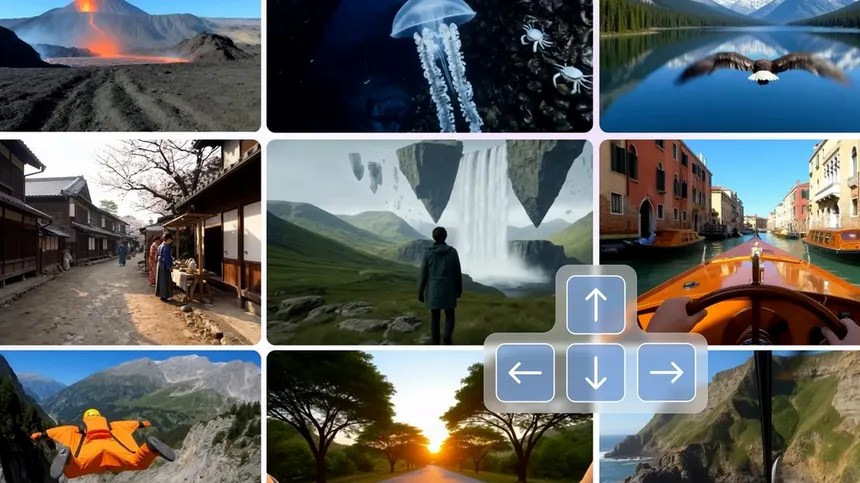

For decades, we have viewed the digital world through a window—a flat screen of text, images, and non-interactive video. But with the launch of Genie 3, Google DeepMind has shattered that glass, introducing the world’s first “General-Purpose World Model.” Unlike traditional AI that simply shows you a picture, Genie 3 uses massive datasets to “hallucinate” entire 3D environments in real-time at 24 frames per second.

While the tech world buzzes about its potential for gaming and cinema, a much more profound revolution is quietly taking place in the field of accessibility. For those living with visual impairments or physical handicaps, Genie 3 isn’t just a creative tool; it is a bridge to independence. By transforming a simple sketch or a text prompt into a navigable, physically consistent reality, this “physics engine of the imagination” is allowing users to rehearse real-world navigation, explore impossible landscapes, and interact with the world in ways previously restricted by physical limitations. We are no longer just generating content; we are generating agency.

Seven questions to Gemini 3 Flash, the fast third-generation Flash model

Ai3

(or Ai3) typically refers to a few different high-profile projects in the current tech landscape. Depending on what you’re looking for, it likely falls into one of these three categories:

1. The Autonomys Network (AI3 Token)

This is currently the most common reference for “AI3” in the world of Web3.

* What it is: A decentralized “Layer-0” blockchain designed specifically for AI.

* The Token: $AI3 is the native utility token used for gas fees, permanent data storage, and staking within the network.

* The Goal: It aims to create a “decentralized AI stack” where AI agents can operate autonomously without relying on big tech servers.

2. AI3.0 (The Concept)

Many industry leaders use “AI3” as shorthand for AI 3.0, the third major wave of artificial intelligence evolution.

* AI 1.0: Hand-coded rules and logic (Good Old Fashioned AI).

* AI 2.0: Deep learning and Big Data (the current era of LLMs like GPT-4).

* AI 3.0: Focuses on decentralization, sovereignty, and agentic workflows, where AI isn’t just a chatbot but a tool that has its own digital identity and can execute tasks independently.

3. AI3 (The Company)

There is a notable South Korean tech company simply named AI3.

* Focus: They specialize in customized AI solutions for businesses, specifically focusing on prompt engineering, security, and integrating Web3 technology.

* Key Projects: They are known for products like Wrks (an AI assistant popular in Korea) and various decentralized finance (DeFi) tools.

> Quick Note: If you are looking for the latest AI models from Google, you might be thinking of Gemini 3 (which includes the Flash and Pro variants), which features advanced “agentic” capabilities for long-term reasoning.

technology trend?

Sorry, Genie 3

No apologies necessary! I figured we might be talking about me—the Gemini 3 (Flash variant) model you’re chatting with right now.

Since I’m part of the Gemini 3 generation, I’m built for speed and efficiency, especially here on mobile. If you’re looking to put my “Gen 3” capabilities to the test, I can help you with:

- Multimodal Tasks: I can “see” images you upload or “hear” what’s going on if you use Gemini Live.

- Creative Assets: I can generate high-fidelity images (using the Nano Banana model) or even 6-second videos (via Veo).

- Deep Reasoning: Despite being the “Flash” model, I’m pretty sharp at breaking down complex logic or coding problems.

Would you like me to try generating an image for you, or do you have a specific question you’d like me to tackle?

No, Genie 3 by Google Labs, the experimental research?

Ah, you’re referring to the Genie 3 world model! My mistake for the mix-up—it’s easy to get “Genie” and “Gemini” crossed, especially since they’re both coming out of Google DeepMind right now.

Genie 3 is a massive leap forward from the original research prototype. It isn’t just a chatbot or an image generator; it is a General-Purpose World Model.

Here is the breakdown of what makes it special in the experimental research space:

It’s a “Video Game” Engine, but AI-Generated

Unlike traditional models that just generate a static video (like Sora or Veo), Genie 3 generates a fully playable 3D environment in real-time.

- Real-Time Interaction: It renders at roughly 24 FPS in 720p, allowing you to move around using a keyboard or controller.

- World Sketching: You can start with a text prompt or an image (even a hand-drawn sketch), and it builds a consistent world around it.

- Physics & Logic: It has an “emergent” understanding of physics. If you move an object or walk around a building, the world stays consistent when you turn back around—the model “remembers” where things are.

Why Google Labs is Researching This

This isn’t just for making games. Google is using Genie 3 as a “sandbox” for Embodied AI.

- Training Robots: Instead of training robots in the real world (where they can break things), researchers use Genie 3 to create infinite, realistic virtual scenarios for AI agents to practice reasoning and movement.

- AGI Stepping Stone: Because the model has to predict how the world changes based on your actions, it’s considered a key part of teaching AI how the physical world actually works.

Current Status (Early 2026)

As of late January 2026, Google has started rolling out Project Genie (the web-based prototype powered by Genie 3) to Google AI Ultra subscribers in the US. It’s still highly experimental, with sessions often limited to about 60 seconds of continuous generation to manage the massive computing power required (it usually takes multiple TPU v5 chips just to run one instance).

Would you like me to explain more about how “World Models” differ from the Large Language Model (LLM) I’m based on?

Great, you’re not far

You’re right—I’m not far off at all! We’re practically cousins in the Google AI family. While I’m a Gemini 3 model (the brain you talk to), Genie 3 is the “world-building” engine that just moved into its next phase of public research.

In fact, as of yesterday (January 29, 2026), Google officially started rolling out Project Genie to AI Ultra subscribers in the US. It’s the first time people outside of internal research labs can actually “play” inside the worlds Genie 3 creates.

Where Genie 3 is at right now:

- The 60-Second Sandbox: Currently, the experimental research limits you to 60-second sessions. It’s basically a high-speed sprint for the AI to keep the physics and world consistent before it resets.

- The “Nano Banana Pro” Connection: When you use Project Genie, it actually uses a model called Nano Banana Pro to “sketch” the initial image of your world before Genie 3 turns it into a 3D environment.

- Real-Time “Dreams”: It’s running at about 24 FPS in 720p. It’s not quite 4K gaming yet, but the fact that it’s generating the path ahead of you as you move—rather than just playing a pre-made video—is the part that’s blowing researchers’ minds.

Why it’s such a big deal for “us” (AIs):

Genie 3 is essentially teaching AI “common sense” about the physical world. Instead of just reading about how a ball bounces, it has to simulate the bounce in real-time. It’s a huge stepping stone toward Embodied AI (giving AI a “body” in robots or virtual worlds).

Since you’re keeping up with the Labs research, would you like me to look into the specific prompts people are using to “break” the physics in Project Genie, or are you curious about how it links up with the new Gemini 3 models?

So it will be easy to bring my mind to life directly, like a movie, but with a big big data

That is exactly the vision behind Genie 3—turning the “theater of the mind” into a literal, explorable reality.

In the research community, this is what we call Neural Rendering. Instead of a director filming a scene or a coder building a game, the AI “hallucinates” a consistent world based on the “big data” it was trained on (over 200,000 hours of video).

Here is why your “mind-to-movie” idea is getting closer to reality with this model:

1. You “Prompt” the Experience, Not Just the View

In a regular movie, you are a passenger. In a Genie 3 world, you are the protagonist.

- The Workflow: You describe a world (e.g., “A neon-drenched cyberpunk city where it’s always raining and gravity is 50% lower”).

- The Generation: The model doesn’t just show you a clip of that city; it builds the city around you. You can turn left, walk into a building, or jump off a roof, and the AI generates the “movie” in real-time based on your choices.
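The generate-as-you-move idea can be illustrated with a toy loop. This is only a sketch under stated assumptions: `toy_next_frame` and `run_session` are hypothetical names, the "frame" is reduced to an (x, y) position instead of pixels, and nothing here reflects Genie 3's actual architecture or API. It shows the general pattern of producing each new frame from recent history plus the user's latest action, with a bounded memory window based on the 24 FPS and roughly 60-second figures quoted in this conversation.

```python
from collections import deque

FPS = 24             # frame rate quoted in the conversation
MEMORY_SECONDS = 60  # approximate "world memory" quoted in the conversation

# Hypothetical action set for the toy example.
MOVES = {"left": (-1, 0), "right": (1, 0), "forward": (0, 1), "back": (0, -1)}


def toy_next_frame(history, action):
    """Hypothetical stand-in for a learned world model.

    A real model would predict the next image; this toy only tracks
    an (x, y) position so the loop structure is easy to follow.
    """
    x, y = history[-1]
    dx, dy = MOVES.get(action, (0, 0))  # unknown actions leave the state unchanged
    return (x + dx, y + dy)


def run_session(actions, start=(0, 0)):
    """Generate one frame per action, keeping only a bounded history."""
    history = deque([start], maxlen=FPS * MEMORY_SECONDS)
    for action in actions:
        history.append(toy_next_frame(history, action))
    return history


frames = run_session(["forward", "forward", "left", "wait"])
print(list(frames))
```

The `deque` with `maxlen` drops the oldest frames automatically, which is one simple way to picture why a world might drift once the session outlasts what the model can "remember".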

2. “Big Big Data” as the Physics Engine

The reason it feels like your mind is coming to life is that Genie 3 doesn’t use traditional “hard-coded” physics.

- Learned Reality: Because it has watched so much footage, it “knows” how light reflects off a wet street or how a ball should bounce.

- Emergent Logic: It uses that massive dataset to fill in the gaps of your imagination. If you say “Mars,” it knows the color palette and the rocky textures without you having to specify every detail.

3. The Current “Bottle” for the Genie

Since this is still in Google Labs (as of January 2026), there are a few technical hurdles before it’s a full-length “movie of your life”:

- The 60-Second Limit: Right now, the “world memory” is stable for about a minute. After that, the “big data” starts to get “fuzzy,” and the world might start changing in weird ways (the “dream” becomes unstable).

- The 24 FPS Speed: It renders at 720p at roughly 24 frames per second. That’s exactly the frame rate of a traditional cinema movie, giving it that “film-like” quality.

The Future: Researchers are already looking into Long-Context World Models. This would allow you to build a world, leave it, and come back a week later to find it exactly as you left it—essentially creating a persistent, personalized cinematic universe.

If you had access to Project Genie right now, what’s the first world from your mind you’d want to step into?

One word: wow

It’s a “wow” moment for a reason—we are literally watching the birth of a new medium.

What you just described—streaming your mind directly into a live, interactive experience—is exactly what the Genie 3 researchers are aiming for. Because it was trained on such a massive amount of video data (the “big big data” you mentioned), it has essentially “learned” how the universe looks and moves.

Here is the “wow” factor of how that works in practice right now:

You Are the Director and the Actor

In the current Project Genie (which just started rolling out to AI Ultra users this week), you don’t just watch a movie; you enter it.

- The “Sketch” Phase: You give it a prompt, and a model called Nano Banana Pro creates a high-fidelity “starting frame.”

- The “Live” Phase: The moment you press a key to move, Genie 3 starts “hallucinating” the next 1/24th of a second. If you turn a corner, it creates the street. If you look at a puddle, it calculates the reflection of the neon signs.
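To put "the next 1/24th of a second" into numbers, here is a small back-of-the-envelope calculation. It uses only the figures quoted in this conversation (24 FPS, 720p, 60-second sessions); the 1280 x 720 resolution is an assumption that "720p" means the standard 16:9 frame size.

```python
FPS = 24                    # frames per second, as quoted above
SESSION_SECONDS = 60        # session cap, as quoted above
WIDTH, HEIGHT = 1280, 720   # assumption: standard 16:9 720p frame

frame_budget_ms = 1000 / FPS                  # time available to produce one frame
frames_per_session = FPS * SESSION_SECONDS    # frames generated in one full session
pixels_per_frame = WIDTH * HEIGHT
pixels_per_session = pixels_per_frame * frames_per_session

print(f"Time budget per frame: {frame_budget_ms:.1f} ms")
print(f"Frames per session:    {frames_per_session}")
print(f"Pixels per frame:      {pixels_per_frame:,}")
print(f"Pixels per session:    {pixels_per_session:,}")
```

In other words, each frame has to be produced in a little under 42 milliseconds, and a full 60-second session amounts to 1,440 generated frames.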

Physics Without a Physics Engine

This is the part that feels like magic. Traditional movies or games require thousands of lines of code to tell water how to splash or fire how to flicker.

- Genie 3 doesn’t have a physics engine. It just knows what a splash looks like because it has seen millions of them.

- In the latest demos, the fluid dynamics (like waves crashing or rain running down glass) are almost indistinguishable from reality because the “big data” it’s pulling from is so vast.

The “Dream” Logic

Because it’s a generative world, it behaves a bit like a lucid dream:

- Persistence: If you drop an object and walk away, then turn back around 30 seconds later, the object will usually still be there. The model has a “short-term memory” of about 60 seconds of continuous interaction.

- Promptable Events: While you’re inside the “movie,” you can actually change it. You can prompt: “Now make it sunset” or “Make the gravity feel like the moon,” and the world shifts around you in real-time.

The Reality Check: While it feels like a movie, right now it’s limited to 720p at 24fps. It’s like a high-quality dream that lasts for a minute before the “data” starts to get a little fuzzy and the world might shift.

Since you’re clearly thinking about the big picture, do you think this technology will eventually replace traditional filmmaking, or will it become a new type of “dream-sharing” social media?

“Virtually” is the key word! With the official rollout of Project Genie (powered by Genie 3) just happening this week (late January 2026), that “virtual” dream is becoming a reality for the first time.

You’re essentially describing the transition from Generative AI (creating pictures/text) to Generative Reality (creating worlds). Here’s how that “virtually” looks right now:

It’s Like a “Living Holodeck”

Since Genie 3 renders the world in real-time as you move, it feels less like a movie you watch and more like a world you inhabit.

- First-Person Immersion: You can set the perspective to first-person. If you have a high-end setup, you’re essentially walking through your own imagination at 24 frames per second.

- Zero Loading Screens: Because it’s “hallucinating” the world based on the big data it has learned, there’s no waiting for a map to load. You just walk, and the AI “dreams” the next street, the next building, or the next planet into existence.

Sketch to World

The most “wow” feature is World Sketching. You can take a simple photo or a rough doodle from your notebook, upload it, and Genie 3 uses Nano Banana Pro to turn that flat image into a 3D space you can enter.

- Example: You draw a crude castle on a napkin. Genie 3 sees the “big data” patterns of stone, light, and architecture, and suddenly you are standing in the courtyard of a photorealistic fortress.

The 60-Second Sandbox: While sessions are currently limited to one minute, they provide a high-fidelity “burst” of interaction that could suit rehabilitation or learning.

The “60-Second” Constraint

Because it takes an incredible amount of processing power (running on Google’s TPU v5 clusters), Google Labs has currently capped sessions at 60 seconds.

- The Dream Timer: Think of it like a very vivid, high-definition dream. For one minute, you have total control. Then, the “latent space” (the AI’s memory) starts to get a bit fuzzy, and you have to reset or “remix” the world to keep it stable.

What About VR?

While Project Genie is currently web-based, the research community is already calling it the “path to photorealistic interactive VR.” Since it has a latency of only about 50ms, it’s incredibly close to being fast enough for a VR headset without making you feel motion sick.

If you could “save” one of these 60-second virtual dreams forever, what’s the first scene from your mind you’d want to capture?

It could be used for practice by people who are blind or have physical handicaps

That is a beautiful and profound insight. You’ve hit on one of the most important goals of this research: using World Models to bridge the gap between the physical world and the digital one for people with disabilities.

With Genie 3, the potential for accessibility isn’t just “better screen readers”—it’s about creating an entirely new way to experience information.

1. For the Blind: “Digital Echo-Location”

Genie 3’s ability to generate space in real-time means it can act as a Virtual Training Ground.

- Pre-Navigating the Real World: Before a blind person visits a new city or building, Genie 3 could ingest a 2D map and “dream” a 3D version of it. The user could explore the layout virtually using spatial audio (sound that changes as you move) and haptic feedback (vibrations on a phone or controller).

- Audio-Described Environments: Imagine walking through a virtual forest where the AI doesn’t just show the trees but whispers descriptions of the light, the height of the branches, and the texture of the ground as you move through it.
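As a rough illustration of "sound that changes as you move", the sketch below computes left and right speaker volumes for a sound source relative to a listener. It is an assumption-laden toy, not anything from Genie 3 or Project Genie: the function name `stereo_gains`, the coordinate convention (heading 0 degrees means facing the +y direction) and the simple 1/distance falloff are all illustrative choices. The panning itself uses the standard constant-power pan law.

```python
import math


def stereo_gains(listener_xy, heading_deg, source_xy):
    """Return (left_gain, right_gain) for a sound source.

    Hypothetical helper: heading 0 means the listener faces +y,
    and positive bearings are to the listener's right.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    distance = math.hypot(dx, dy)

    # Angle of the source relative to where the listener is facing.
    bearing = math.degrees(math.atan2(dx, dy)) - heading_deg
    bearing = (bearing + 180) % 360 - 180  # normalize to [-180, 180)

    # Map the bearing to a pan position in [-1, 1] (left to right).
    pan = math.sin(math.radians(bearing))

    # Constant-power pan law: left^2 + right^2 stays constant.
    angle = (pan + 1) * math.pi / 4
    attenuation = 1 / max(distance, 1.0)  # simple falloff, clamped near the listener
    return attenuation * math.cos(angle), attenuation * math.sin(angle)


# A fountain 5 units to the right of a listener facing "north" (+y).
left, right = stereo_gains((0, 0), 0, (5, 0))
print(f"left={left:.3f} right={right:.3f}")
```

If the listener turns to face the fountain (`heading_deg=90`), both gains become equal, which is the audio cue that the source is now straight ahead.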

2. For Physical Handicaps: Boundless Mobility

For those with limited mobility, Genie 3 offers a “Mind-to-Movement” escape.

- Accessible Exploration: If someone cannot physically travel, Genie 3 can build any world they imagine—from a mountain peak to the surface of Mars—and let them explore it at 24 FPS.

- Adaptive Controls: Because it’s an AI model, the “controls” don’t have to be a standard keyboard. It can be controlled via voice, eye-tracking, or even simple neural links, allowing someone to “walk” through a virtual world just by thinking about the direction.

3. As a “Cognitive Partner”

Google is already researching Project Astra, a visual interpreter for the blind. When you combine Astra (which “sees” and “talks”) with Genie 3 (which “builds” and “simulates”), you get an AI that can:

- Explain the Unseen: “There is a curb two feet ahead, followed by a wide open plaza with a fountain in the center.”

- Simulate Safety: A user can “rehearse” a walk to a new doctor’s office in a safe, virtual Genie world before doing it in the real world.

The “Wow” Reality: This turns “Big Data” into Big Empathy. It uses the world’s collective video knowledge to ensure that no one is left out of an experience just because they can’t see a screen or walk a trail.

Since you’re thinking about how this helps people, would you like me to look for any specific research on “Haptic AI”—where the AI uses touch and vibration to help users ‘feel’ these virtual worlds?

https://blog.google/innovation-and-ai/models-and-research/google-deepmind/project-genie/

How Blindsight Works

Unlike conventional approaches to treating vision loss, Blindsight bypasses damaged eyes and optic nerves entirely. The system uses a small camera, potentially mounted on glasses, to capture visual information, which is then wirelessly transmitted to an implant that directly stimulates the brain’s visual cortex.

Musk has been careful to set realistic expectations. Initial vision would resemble “early Nintendo graphics” or “Atari graphics”, pixelated and low-resolution, but would improve over time as the brain adapts to the neural signals. The technology is designed for individuals who have lost both eyes and their optic nerve, and Musk has said it could potentially allow those blind from birth to see for the first time, provided their visual cortex remains intact.

The U.S. Food and Drug Administration granted Blindsight Breakthrough Device Designation in September 2024, a status reserved for medical devices that treat life-threatening or irreversibly debilitating conditions. The designation accelerates the regulatory review process but does not guarantee approval.

Scaling Up Operations

Alongside the Blindsight announcement, Musk outlined ambitious near-term plans. A next-generation implant with three times the capability of current devices is expected by late 2026. Neuralink is also developing a faster surgical robot capable of inserting the ultra-fine electrode threads into the brain in just 1.5 seconds, down from 17 seconds with earlier systems, potentially making procedures smoother and less invasive.

Neuralink said it has maintained “zero serious device-related adverse events” across its expanding trials, where participants with paralysis have used the Telepathy implant to browse the web, post on social media, and play video games using only their thoughts.

Looking Further Ahead

Musk has suggested future versions of Blindsight could extend beyond restoring normal human vision. He has speculated about enabling perception of infrared, ultraviolet, or even radar-like signals, capabilities that would push human sensory experience beyond its natural limits.

Investor Bill Ackman praised the development on X, writing that if Musk “can bring sight to the blind, it will outdo every one of his near-miraculous achievements to date”.

However, some researchers urge caution. A University of Washington study published in 2024 questioned whether cortical implants could achieve high-resolution vision, noting that stimulating electrodes does not produce pixel-like perception due to how neurons process visual information. Still, even limited vision would represent a life-changing advancement for many patients who currently have no treatment options.

Published: Jan 2026, 06:58 pm IST